Simplicity Theory

Simplicity, Complexity, Unexpectedness, Cognition, Probability, Information by Jean-Louis Dessalles

(created 31 December 2008, updated February 2026)

These pages are an invitation to challenge the model they present. All suggestions, critiques and contributions are welcome. Please send reactions to JL at@ dessalles.fr.

An event is unexpected if it is simpler to describe than to generate.

Unexpectedness:

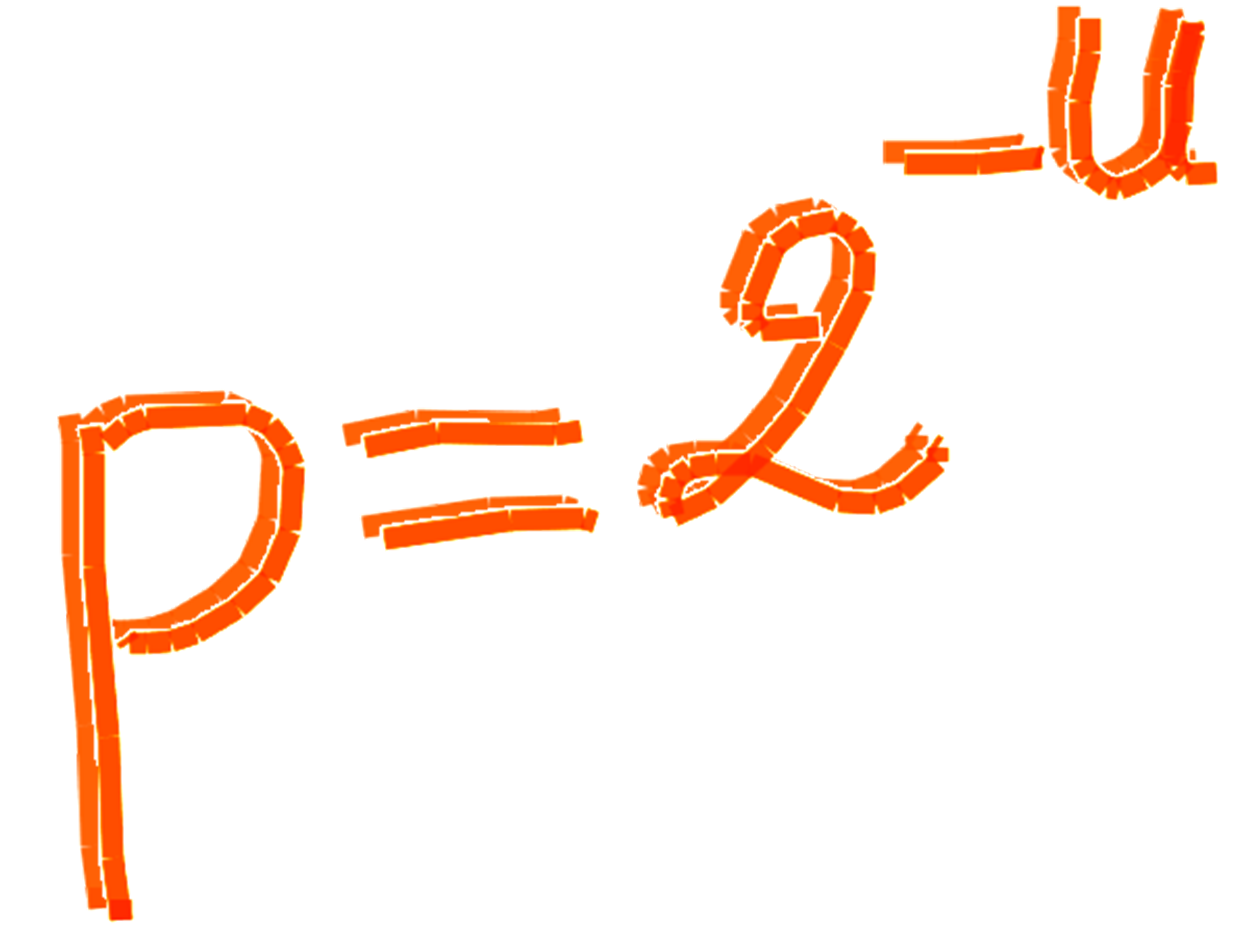

$U = C_w - C$

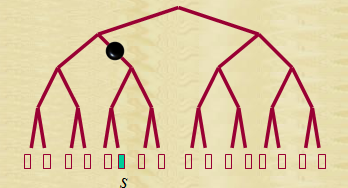

We may consider Cw(s) as the length of a minimal program that the W-machine can use to generate s.

Remark: You’re right if you think that an abnormally complex object may be unexpected. This is consistent with the above definition: the exceptionally complex object is abnormally simple to describe precisely because it is an exception (see the "Pisa Tower" effect).

Simplicity Theory has been used to explain several important phenomena concerning human interest in spontaneous communication and in news (see bibliography below). More should come. Below are a few didactic examples.

$p = 2^{-U}$
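The relation between the two complexities, unexpectedness and ex-post probability can be written as a short Python sketch. The function names are hypothetical, and the bit values in the usage line are placeholders chosen for the arithmetic, not measurements; in ST both complexities would come from the observer's W-machine and O-machine.

```python
def unexpectedness(cw_bits: float, c_bits: float) -> float:
    """U = Cw - C: generation complexity minus description complexity, in bits."""
    return cw_bits - c_bits

def ex_post_probability(cw_bits: float, c_bits: float) -> float:
    """p = 2**(-U): each extra bit of unexpectedness halves the ex-post probability."""
    return 2.0 ** -unexpectedness(cw_bits, c_bits)

# Hypothetical event: 20 bits to generate, but only 10 bits to describe.
print(unexpectedness(20, 10))       # 10
print(ex_post_probability(20, 10))  # 0.0009765625
```

When generation and description cost the same number of bits, U is 0 and p is 1: nothing about the event is unexpected.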

$I = U$

The unexpectedness term U allows us to make several non-trivial predictions about what constitutes valuable information. These predictions include logarithmic variations with distance (see the "next door" effect), the role of prominent places or individuals (see the "Eiffel Tower" effect), habituation effects, the importance of coincidences (see the Lincoln-Kennedy effect), recency effects, transitions, violations of norms (see the running nuns), and records (see the "Robert Wadlow" effect). These theory-based predictions apply to personalized information as well as to newsworthiness in the media. However, the other fundamental dimension of information is missing: emotional intensity E. One should rather write:

$I = U + E_h$

This means that emotional events elicit more intense emotions when they appear more unexpected.

Simplicity Theory is presented in the fifth chapter of this great MOOC on algorithmic information:

Chater, N. & Brown, G. D. A. (2008). From universal laws of cognition to specific cognitive models. Cognitive Science, 32 (1), 36-67.

Chater, N. & Vitányi, P. (2003). Simplicity: a unifying principle in cognitive science? Trends in Cognitive Sciences, 7 (1), 19-22.

Dessalles, J.-L. (2006). A structural model of intuitive probability. In D. Fum, F. Del Missier & A. Stocco (Eds.), Proceedings of the seventh International Conference on Cognitive Modeling, 86-91. Trieste, IT: Edizioni Goliardiche.

Dessalles, J.-L. (2008). Coincidences and the encounter problem: A formal account. In B. C. Love, K. McRae & V. M. Sloutsky (Eds.), Proceedings of the 30th Annual Conference of the Cognitive Science Society, 2134-2139. Austin, TX: Cognitive Science Society.

Dessalles, J.-L. (2008). La pertinence et ses origines cognitives - Nouvelles théories. Paris: Hermes-Science Publications.

Dessalles, J.-L. (2010). Emotion in good luck and bad luck: predictions from simplicity theory. In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd Annual Conference of the Cognitive Science Society, 1928-1933. Austin, TX: Cognitive Science Society.

Dessalles, J.-L. (2011). Simplicity Effects in the Experience of Near-Miss. In L. Carlson, C. Hoelscher & T. F. Shipley (Eds.), Proceedings of the 33rd Annual Conference of the Cognitive Science Society, 408-413. Austin, TX: Cognitive Science Society.

Dessalles, J.-L. (2013). Algorithmic simplicity and relevance. In D. L. Dowe (Ed.), Algorithmic probability and friends - LNAI 7070, 119-130. Berlin, D: Springer Verlag.

Dessalles, J.-L. (2017). Conversational topic connectedness predicted by Simplicity Theory. In G. Gunzelmann, A. Howes, T. Tenbrink & E. Davelaar (Eds.), Proceedings of the 39th Annual Conference of the Cognitive Science Society, 1914-1919. Austin, TX: Cognitive Science Society.

Dessalles, J.-L. & Sileno, G. (2024). Simplicity bias in human-generated data. In L. K. Samuelson, S. L. Frank, M. Toneva, A. Mackey & E. Hazeltine (Eds.), Proceedings of the 46th Annual Conference of the Cognitive Science Society (CogSci-2024), 3637-3643.

Dimulescu, A. & Dessalles, J.-L. (2009). Understanding narrative interest: Some evidence on the role of unexpectedness. In N. A. Taatgen & H. van Rijn (Eds.), Proceedings of the 31st Annual Conference of the Cognitive Science Society, 1734-1739. Amsterdam, NL: Cognitive Science Society.

Fortier, M. & Kim, S. (2017). From the impossible to the improbable: A probabilistic account of magical beliefs and practices across development and cultures. In C. M. Zedelius, B. C. N. Müller & J. W. Schooler (Eds.), The science of lay theories, 265-315. Springer.

Houzé, E., Dessalles, J.-L., Diaconescu, A. & Menga, D. (2022). What should I notice? Using algorithmic information theory to evaluate the memorability of events in smart homes. Entropy, 24 (3), 346.

Leyton, M. (2001). A generative theory of shape. New York: Springer Verlag, 2145.

Murena, P.-A., Dessalles, J.-L. & Cornuéjols, A. (2017). A complexity based approach for solving Hofstadter’s analogies. In A. Sanchez-Ruiz & A. Kofod-Petersen (Eds.), International Conference on Case-Based Reasoning (ICCBR 2017), 53-62. Trondheim, Norway.

Saillenfest, A. & Dessalles, J.-L. (2012). Role of Kolmogorov complexity on interest in moral dilemma stories. In N. Miyake, D. Peebles & R. Cooper (Eds.), Proceedings of the 34th Annual Conference of the Cognitive Science Society, 947-952. Austin, TX: Cognitive Science Society.

Saillenfest, A. & Dessalles, J.-L. (2014). Can Believable Characters Act Unexpectedly? Literary & Linguistic Computing, 29 (4), 606-620.

Saillenfest, A. & Dessalles, J.-L. (2015). Some probability judgments may rely on complexity assessments. Proceedings of the 37th Annual Conference of the Cognitive Science Society, 2069-2074. Austin, TX: Cognitive Science Society.

Sileno, G., Saillenfest, A. & Dessalles, J.-L. (2017). A computational model of moral and legal responsibility via simplicity theory. In A. Wyner & G. Casini (Eds.), 30th International Conference on Legal Knowledge and Information Systems (JURIX 2017), 171-176. Frontiers in Artificial Intelligence and Applications, 302.

Sileno, G. & Dessalles, J.-L. (2022). Unexpectedness and Bayes’ Rule. In A. Cerone (Ed.), 3rd International Workshop on Cognition: Interdisciplinary Foundations, Models and Applications (CIFMA), 107-116. Switzerland: Springer Nature.

Simplicity Theory (ST) is a cognitive theory based on the following observation: human individuals are highly sensitive to any discrepancy in complexity. Their interest is aroused by any situation which appears "too simple" to them. This has consequences for the computation of (narrative) interest, relevance and emotional intensity.

ST’s central result is that unexpectedness is the difference between expected complexity and observed complexity. One important consequence is the formula $p = 2^{-U}$, which states that ex-post probability p depends not on complexity, but on unexpectedness U, which is a difference in complexity.

Context

The scientific study of the factors that determine human interest may have been hindered by the tacit assumption that these factors are far too complex, multiple and fuzzy to be properly modelled. The results presented in these pages were obtained thanks to the converse assumption: that our minds are much simpler than commonly believed. Ironically, simplicity plays a major role in the theory presented here. Our minds seem to possess the amazing ability to monitor the complexity of some of their own processes. Here, complexity is to be taken in its technical sense (size of minimal description).

Significance for language and cognitive sciences

Simplicity Theory (ST)

An event is unexpected if it is simpler (i.e. less complex) to describe than to generate.

All terms are important here.

Formally:

Generation complexity Cw(s) is the complexity (minimal description) of all parameters that have to be set for the situation s to exist in the "world". For example, the complexity of generating the fact that you reach me by chance, knowing that you have to cross five four-road junctions to do so, is 5 × log2 3 = 7.9 bits, since at each junction you must choose among the three roads other than the one you came from.
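The junction arithmetic can be checked with a few lines of Python. This is a minimal sketch assuming, as the example does, that each of the five junctions offers three equally likely exits and that the choices are independent; the function name is hypothetical.

```python
import math

def generation_complexity(choices_per_step: list[int]) -> float:
    """Bits needed to set every independent parameter: the sum of log2(options) per step."""
    return sum(math.log2(n) for n in choices_per_step)

# Five four-road junctions: arriving by one road leaves 3 possible exits at each.
cw = generation_complexity([3] * 5)
print(f"Cw = {cw:.1f} bits")  # Cw = 7.9 bits
```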

The W-machine (or World-machine) is a computing machine that models the way the observer represents the world and its constraints.

Description complexity C(s) is the length of the shortest available description of s (that makes s unique).

This notion corresponds to the usual definition introduced in the 1960s by Andrei Kolmogorov, Gregory Chaitin and Ray Solomonoff. However, we must instantiate the machine used in that definition: C(s) corresponds to the complexity of cognitive operations performed by an observer.

We may consider C(s) as the length of a minimal program that the O-machine can use to generate s.

The O-machine (or Observation-machine) is a computing machine that can rely on all cognitive abilities and knowledge of the observer.

Caveat: The main pitfall when beginning with ST is failing to consider events, i.e. unique situations.

For instance, you may think that a window is a simple object, because it is easy to describe. Indeed! But that window over there is complex, precisely because it looks like any other window. To make it unique, I need a significant amount of information in addition to the fact that it is a window (see Conceptual Complexity).

Similarly, consider the number 131072. There is a difference between merely saying that it is a number and producing a way of distinguishing it from all other numbers. Kolmogorov complexity corresponds to the latter. Describing a number requires that one can eventually reconstruct all its digits (e.g. by saying that it is 2^17).
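A rough bit count makes the contrast concrete. The sketch below is only an illustration, not ST's O-machine: it compares the cost of spelling out 131072 digit by digit with the cost of specifying it as a power of two, where only the exponent 17 has to be given. It assumes each decimal digit costs log2 10 bits and ignores the constant cost of saying "power of two" and of delimiting the code; the function names are hypothetical.

```python
import math
from typing import Optional

def digit_by_digit_bits(n: int) -> float:
    """Cost of spelling out every decimal digit of n, at log2(10) bits per digit."""
    return len(str(n)) * math.log2(10)

def power_of_two_bits(n: int) -> Optional[float]:
    """Cost of giving only the exponent k when n == 2**k; None if n is not a power of two."""
    if n <= 0 or n & (n - 1):
        return None
    k = n.bit_length() - 1
    return digit_by_digit_bits(k)  # the exponent is itself spelled out in decimal

n = 131072
print(round(digit_by_digit_bits(n), 1))  # 19.9
print(round(power_of_two_bits(n), 1))    # 6.6
```

Both procedures let one reconstruct all six digits, which is what a description in the Kolmogorov sense requires; the shorter one sets the complexity.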

Predictions: Some phenomena explained by ST

And also:

Relevance

Probability, Simplicity and Information

A new definition of probability

The formula $p = 2^{-U}$ indicates that human beings assess probability through complexity, not the reverse. Though the very notion of probability is dispensable in cognitive science, this formula is important to account for the many ‘biases’ that have been observed in human probabilistic judgment (Saillenfest & Dessalles, 2015).

Cognitive Information

Emotional intensity, Moral judgments, Responsibility

This observation has far-reaching consequences. Here are a few of them.

Logic, independence, causality

Frequently asked questions

ST considers a resource-bounded version of Kolmogorov complexity, which is computable.
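To see that a bounded notion of complexity can be computed, one can use an off-the-shelf compressor as a crude upper bound on description length. The sketch below uses Python's zlib for that purpose; this is a swapped-in illustration, not the resource-bounded complexity used in ST, which is defined relative to the observer's O-machine. The function name is hypothetical.

```python
import random
import zlib

def compressed_bits(data: bytes) -> int:
    """Upper bound on description length: size of the zlib-compressed data, in bits."""
    return 8 * len(zlib.compress(data, 9))

regular = b"a" * 1000                                       # highly regular input
rng = random.Random(0)                                      # fixed seed for repeatability
irregular = bytes(rng.randrange(256) for _ in range(1000))  # patternless input

print(compressed_bits(regular) < compressed_bits(irregular))  # True
```

The computation always terminates, which is the point of a resource-bounded definition. zlib output includes a small fixed header, so the bound is loose for short inputs.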

See the Observation machine.

Note also that probability theory relies on set theory. But most sets considered in probabilistic reasoning are not computable (e.g. the set of all people who caught the flu).

See the answer in the "Pisa Tower" effect.

Such "situations" are frequent. Each instance is complex, as you need information to distinguish it from all other instances, i.e. to make it an event.
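As a rough illustration, assume N instances that are equally plausible and otherwise indistinguishable: singling one of them out then costs about log2 N bits on top of naming its category. The number of windows below is invented for the example, and the function name is hypothetical.

```python
import math

def bits_to_single_out(num_instances: int) -> float:
    """Information needed to pick one item among equally plausible instances: log2(N) bits."""
    if num_instances < 1:
        raise ValueError("need at least one instance")
    return math.log2(num_instances)

# Hypothetical: one window among 1024 comparable windows in view.
print(bits_to_single_out(1024))  # 10.0
print(bits_to_single_out(1))     # 0.0: a one-of-a-kind instance needs no extra information
```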

See Caveat above. See also the Conceptual Complexity page.

‘Unexpected’ does not mean ‘unpredicted’. See the Lunar Eclipse example.

Depending on the predicates involved, part of the computation may migrate between the generation side and the description side (leaving the difference constant).

See the Inverted Stamp example.

When statistical distributions are available, unexpectedness equals cross-entropy.

Prefix-free codes are used to communicate information.

Here, we need to use the standard definition of complexity, in which code ‘words’ are externally delimited.

Yes, indeed. This is not a problem, as p corresponds to ex-post probability.

For instance, two unremarkable lottery draws may both have ex-post probability close to 1.

Only strictly ex-ante probabilities are supposed to sum to 1.
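A numerical sketch of this point, assuming a 6-out-of-49 lottery and assuming, as a simplification, that an unremarkable draw can only be singled out by listing its numbers, so that its description complexity roughly equals its generation complexity. Both assumptions and the function name are illustrative only.

```python
import math

# Generation complexity of any particular 6-of-49 draw: log2 of the number of possible draws.
cw = math.log2(math.comb(49, 6))

# Simplifying assumption: an unremarkable draw is described by listing it, so C is about Cw.
c_unremarkable = cw

def ex_post_probability(cw_bits: float, c_bits: float) -> float:
    """p = 2**-(Cw - C)."""
    return 2.0 ** -(cw_bits - c_bits)

p1 = ex_post_probability(cw, c_unremarkable)  # first unremarkable draw
p2 = ex_post_probability(cw, c_unremarkable)  # second unremarkable draw
print(p1 + p2)  # 2.0: ex-post probabilities need not sum to 1
```

Each draw, taken ex ante, has probability 1/13983816, and these ex-ante values do sum to 1 over all possible draws.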

Links

MOOC

Further reading